Erfan Aasi

I'm a Postdoctoral Associate at the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT, working with Professors Daniela Rus and Sertac Karaman. My research focuses on advancing the safety and intelligence of robotic systems, with a particular emphasis on autonomous vehicles. By integrating the strengths of classical deep learning algorithms with the emerging capabilities of large language models, I aim to create robust systems that can navigate complex environments, interpret human intent, and adapt to unexpected scenarios.

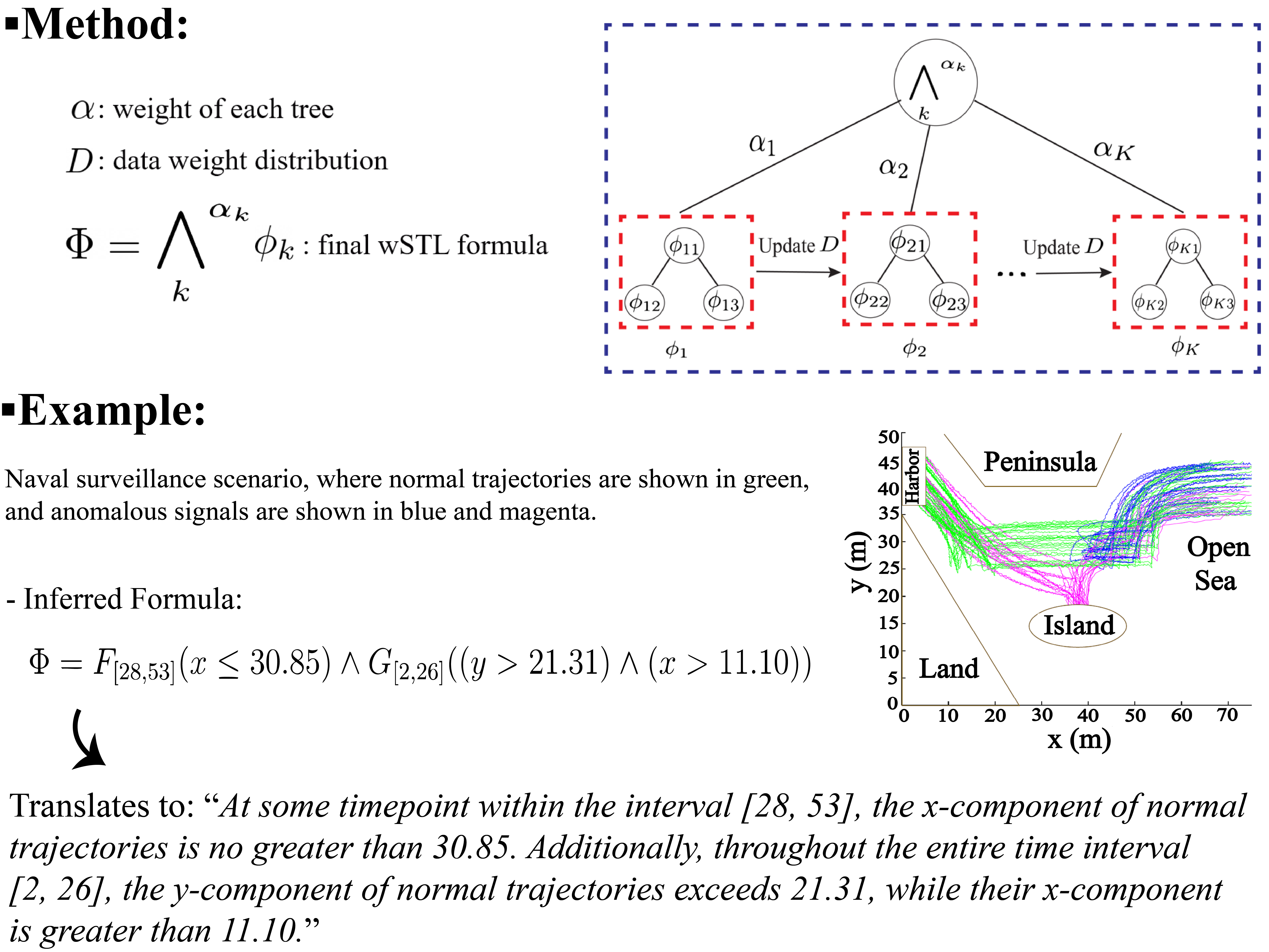

Research Interests: Safe Autonomy, Interpretable Decision-Making, Deep Learning and Language Models
